TU Wien Scientists Make Uncertainty in AI Measurable

A team at TU Wien, composed of Dr. Andrey Kofnov, Dr. Daniel Kapla, Prof. Ezio Bartocci, and Prof. Efstathia Bura, has developed an innovative method to measure and control uncertainty in artificial intelligence (AI) systems. Their approach offers mathematical guarantees on how safely a neural network operates within defined input ranges, helping to prevent certain types of errors.

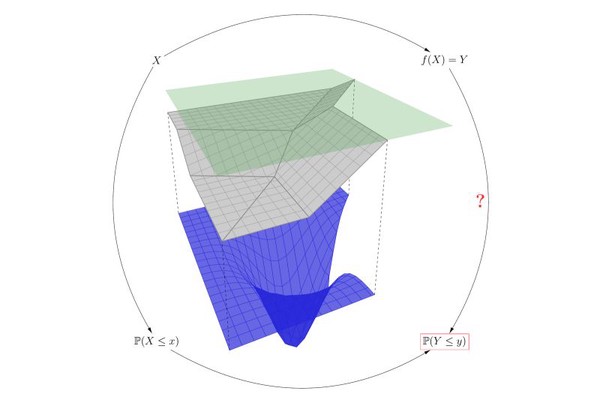

AI technologies are already deeply integrated into our daily lives, from smartphones to self-driving cars. However, even small changes in input data, such as slight image distortions or background noise, can lead to unexpected or unsafe decisions. The new method makes it possible to predict and strictly bound how much a network's outputs can vary when its inputs are uncertain.

The TU Wien researchers use a geometric approach, treating the set of all possible inputs as a high-dimensional space. By systematically dividing this space into smaller regions, they can calculate the range of outputs a neural network can produce on each region and mathematically rule out certain errors. Although the method is not yet suitable for very large AI models, it already works effectively for smaller neural networks and represents an important step toward more trustworthy, explainable, and safer AI systems.
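To give a feel for the idea of dividing the input space and bounding the outputs, here is a minimal sketch in Python. It is not the authors' method: it uses plain interval arithmetic with a uniform grid, a much simpler and well-known technique, and the tiny network (weights `W1`, `B1`, `W2`, `B2`) is a hypothetical toy chosen so that the numbers are easy to check by hand. The toy network computes |x + y|, whose true output range on the square [-1, 1] x [-1, 1] is [0, 2].

```python
from itertools import product

# Hypothetical toy network (an assumption for illustration only):
# 2 inputs -> 2 hidden ReLU units -> 1 output, computing |x + y|.
W1 = [[1.0, 1.0], [-1.0, -1.0]]
B1 = [0.0, 0.0]
W2 = [[1.0, 1.0]]
B2 = [0.0]

def affine_bounds(lo, hi, weights, biases):
    """Bound W @ x + b when each x[i] lies in [lo[i], hi[i]]."""
    out_lo, out_hi = [], []
    for row, b in zip(weights, biases):
        low = high = b
        for w, a, c in zip(row, lo, hi):
            # A positive weight maps the lower input bound to the lower
            # output bound; a negative weight swaps the two.
            if w >= 0:
                low, high = low + w * a, high + w * c
            else:
                low, high = low + w * c, high + w * a
        out_lo.append(low)
        out_hi.append(high)
    return out_lo, out_hi

def relu_bounds(lo, hi):
    """ReLU is monotone, so it can be applied to both bounds directly."""
    return [max(0.0, v) for v in lo], [max(0.0, v) for v in hi]

def network_bounds(lo, hi):
    """Guaranteed (possibly loose) output interval for one input box."""
    lo, hi = affine_bounds(lo, hi, W1, B1)
    lo, hi = relu_bounds(lo, hi)
    lo, hi = affine_bounds(lo, hi, W2, B2)
    return lo[0], hi[0]

def split_box(lo, hi, parts):
    """Cut the input box into parts**dim equally sized sub-boxes."""
    edges = []
    for a, c in zip(lo, hi):
        step = (c - a) / parts
        edges.append([(a + i * step, a + (i + 1) * step) for i in range(parts)])
    for cell in product(*edges):
        yield [e[0] for e in cell], [e[1] for e in cell]

def refined_bounds(lo, hi, parts):
    """Bound each sub-box separately, then take the overall min and max."""
    cells = [network_bounds(l, h) for l, h in split_box(lo, hi, parts)]
    return min(c[0] for c in cells), max(c[1] for c in cells)

if __name__ == "__main__":
    box_lo, box_hi = [-1.0, -1.0], [1.0, 1.0]
    print(network_bounds(box_lo, box_hi))     # (0.0, 4.0): valid but loose
    print(refined_bounds(box_lo, box_hi, 2))  # (0.0, 2.0): tight after splitting
```

Without subdivision, the bound [0, 4] is correct but twice as wide as necessary. After splitting each input axis in two, the bound tightens to [0, 2], which is enough to prove, for example, that this toy network's output can never exceed 3 anywhere in the input square. The number of sub-boxes grows exponentially with the input dimension in this naive grid scheme, which hints at why scaling such guarantees to very large models is hard.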

The research was conducted within TU Wien’s DC SecInt, which fosters interdisciplinary collaboration in the field of secure, reliable, and ethically responsible technology. The results will be presented at the 42nd International Conference on Machine Learning (ICML 2025), one of the world’s leading conferences on machine learning.

The original scientific publication is available online, and a full article with commentary from the authors is available in both German and English.